There are many reasons why users might want to root (or, on iOS, ‘jailbreak’) their devices. Some are unhappy that their phones and tablets come pre-loaded with apps they never asked for and may never want, which might be mere bloatware or, in more sinister cases, spyware.

Others simply want more control over their own device and its functionality: to install ad blockers, to play music videos with the screen locked, or to tweak Android Auto. Apple users jailbreak their devices to install unapproved applications via Cydia or to run OpenSSH.

By some estimates, around 8.5% of Apple users and 7.6% of Android users take such steps. But doing so carries risks for both users and app developers.

In this piece we’ll cover the risks with Android in particular.

Why getting root is risky

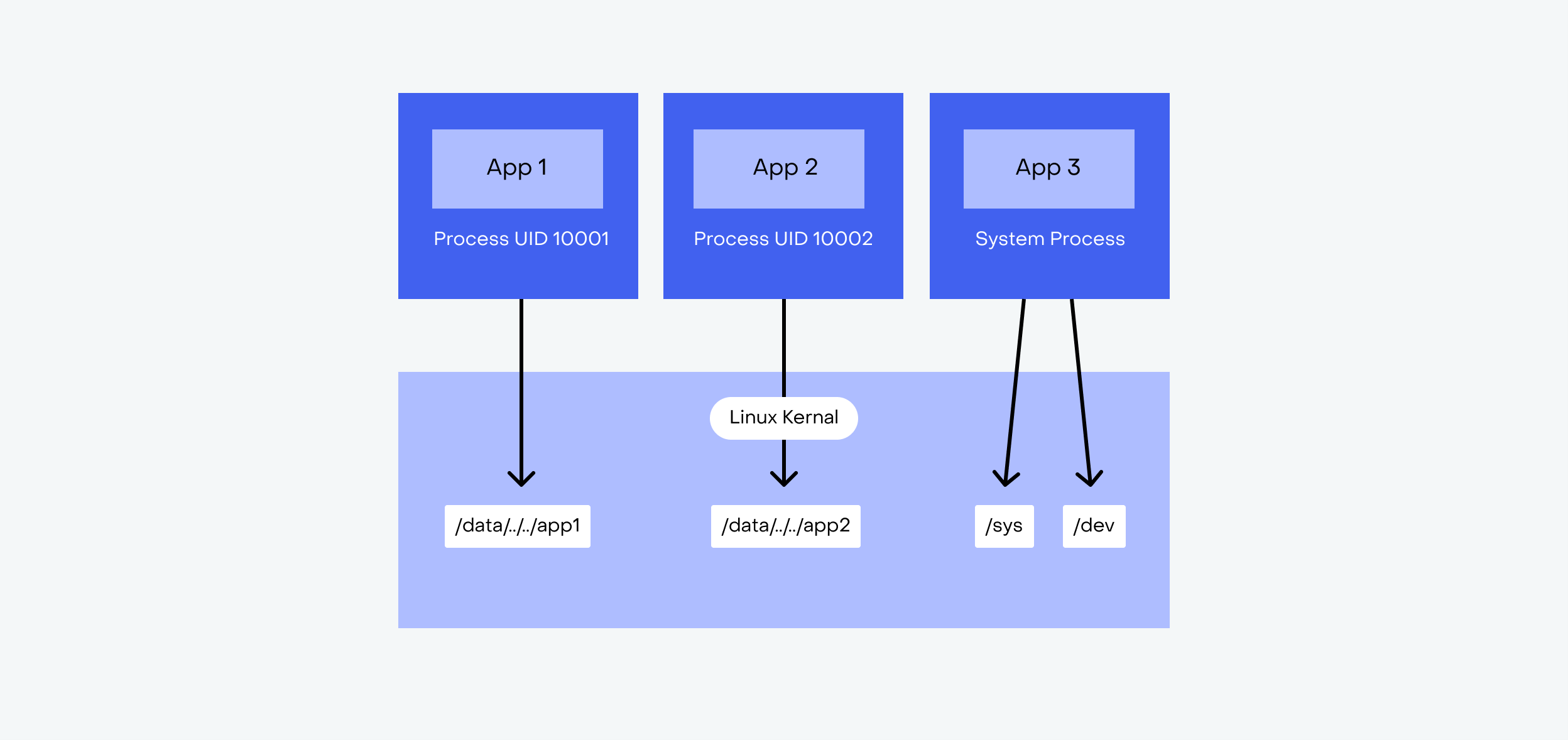

The Android security model is based on the Linux user model. Each application is normally assigned its own Linux user ID (UID) and its own sandbox (apps signed with the same certificate can opt to share a UID through the now-deprecated sharedUserId mechanism). The app runs in a separate process (or, in some cases, a group of processes) and has its own private area of the file system. It has no access to other apps’ process memory and cannot modify the system folders.

Apps are granted permissions at install time or, for dangerous permissions since Android 6.0, at runtime. The sandbox model should guarantee that an app’s data is not accessible to any other application on the device.

But the Linux user with maximum privilege, root (UID 0), can read and freely modify every other application’s data, and so can any process running with root privileges.

When the owner of a phone or tablet roots it, they gain the power to make whatever modifications they want. But malicious actors and malicious software can abuse that same power.
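The access rule described above can be reduced to a toy model. The sketch below is a deliberate simplification, not Android’s actual implementation: it assumes each private directory is owned by a single app UID and is accessible to its owner only, and it ignores SELinux, which adds a second layer of mandatory access control on real devices. The class and method names are hypothetical, and the UIDs are example values.

```java
// Toy model of the Linux discretionary access check behind the Android app sandbox.
// Assumption: one owner UID per private directory, owner-only permissions;
// SELinux, groups, and file modes are deliberately ignored.
final class SandboxModel {
    static final int ROOT_UID = 0;

    // Hypothetical stand-in for an app's private data directory.
    static final class PrivateDir {
        final String path;
        final int ownerUid;

        PrivateDir(String path, int ownerUid) {
            this.path = path;
            this.ownerUid = ownerUid;
        }
    }

    // The owning app may access its directory; root (UID 0) bypasses the check entirely.
    static boolean canAccess(int callerUid, PrivateDir dir) {
        return callerUid == ROOT_UID || callerUid == dir.ownerUid;
    }
}
```

With these example values, a banking app running as UID 10123 can open its own directory and another app running as UID 10456 cannot, but any process running as UID 0 passes the check. That single bypass is what the scenarios below exploit.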

Let’s explore three different risk scenarios.

Risk Scenario 1 - Malware Infiltrating Sandboxes

Suppose the device is infected with a malicious application (there are plenty of ways for that to happen). The trojan inside it can now take advantage of the root privileges the user enabled, giving it access to every folder on the device, including every other application’s private folders.

Since the sandbox rule of no shared access no longer applies, the trojan can exfiltrate whatever is stored in /data/data/Famous-Banking-App, or read the banking app’s process memory through /proc/<pid>/maps and /proc/<pid>/mem (runtime data, cached balances, lists of accounts, transaction history), and report it all to some unknown server. And if security wasn’t a priority for the developers of the Famous Banking App (if they chose to store sensitive data on the device and left secrets unencrypted, for example), the consequences for the user and their bank could be even more severe.

Without the hijacked root privileges, on the other hand, the trojan app would be left trapped in its own sandbox, unable to access the data in the Famous Banking App’s private folders.
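One way to limit the damage in this scenario is not to leave secrets in plain text on disk in the first place. The sketch below, using only the standard javax.crypto APIs, encrypts a value with AES-GCM before it is written to storage. It generates a throwaway key for illustration only; on Android the key would normally be created in, and used through, the Android Keystore so that the raw key material never sits in the app’s files. This raises the bar rather than removing the risk: root-level malware can still read data from process memory while the app is using it.

```java
import javax.crypto.Cipher;
import javax.crypto.KeyGenerator;
import javax.crypto.SecretKey;
import javax.crypto.spec.GCMParameterSpec;
import java.nio.ByteBuffer;
import java.security.GeneralSecurityException;
import java.security.SecureRandom;

// Sketch: encrypt sensitive values before persisting them (AES-256-GCM).
// Assumption: key management is out of scope; on Android the SecretKey would come
// from the Android Keystore rather than being generated and held like this.
final class AtRestCrypto {
    private static final int IV_BYTES = 12;   // 96-bit nonce, the usual choice for GCM
    private static final int TAG_BITS = 128;  // authentication tag length
    private static final SecureRandom RNG = new SecureRandom();

    static SecretKey newKey() {
        try {
            KeyGenerator kg = KeyGenerator.getInstance("AES");
            kg.init(256);
            return kg.generateKey();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    // Output layout: IV || ciphertext-with-tag. A fresh random IV is used for every call.
    static byte[] encrypt(SecretKey key, byte[] plaintext) {
        try {
            byte[] iv = new byte[IV_BYTES];
            RNG.nextBytes(iv);
            Cipher c = Cipher.getInstance("AES/GCM/NoPadding");
            c.init(Cipher.ENCRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, iv));
            byte[] ct = c.doFinal(plaintext);
            return ByteBuffer.allocate(iv.length + ct.length).put(iv).put(ct).array();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    // Throws if the stored bytes were modified, because the GCM tag check fails.
    static byte[] decrypt(SecretKey key, byte[] stored) {
        try {
            Cipher c = Cipher.getInstance("AES/GCM/NoPadding");
            c.init(Cipher.DECRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, stored, 0, IV_BYTES));
            return c.doFinal(stored, IV_BYTES, stored.length - IV_BYTES);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }
}
```

GCM is chosen here because it authenticates as well as encrypts, so tampering with the stored bytes is detected on decryption instead of silently producing garbage.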

Risk Scenario 2 - Fraudulent Certificates, Man-in-the-Middle Attacks

The same principle applies to network traffic. Almost every app relies on HTTPS to confirm that the server it is communicating with is the legitimate, expected one. The system keeps a store of trusted certificate authority (CA) certificates and checks that the certificate presented by the server chains back to one of them. Apps targeting Android 7.0 or later trust only the CA certificates in that system store by default, not ones the user has added; but on a rooted device, the folders containing the system store can be modified just like any other folder.

If a bad actor can get a fraudulent CA certificate installed into that store, for example by using social engineering techniques to get the user to click on a certain link, then any HTTPS checks are pointless. Once the certificate is in place, traffic from the device can be routed through an attacker-controlled server anywhere in the world, which intercepts all requests and can replace all responses. To the end user everything seems to be working as expected, while their data, often the most sensitive data they have, is harvested on a server they know nothing about.

This type of malicious certificate installation is much simpler on a rooted device: the user’s only involvement is clicking the link to download the fraudulent certificate.

Risk Scenario 3 - System API Hijacking

Apps rely on System APIs to use a device’s built-in functionality. Root privileges allow the user to modify any system component, or even to replace the entire system with a custom one. The risks to apps and their users are clear: nothing on the device is off limits.

For example, imagine you have a corporate mail application installed on your rooted device. It holds your contacts and your emails, including contracts and financial reports. Such apps typically cache emails and attachments on disk. If the Android file system APIs themselves are modified, every byte the app writes to disk can be intercepted and copied to other locations, sent over the network, or uploaded to a file-sharing service.

Without root, and the custom modifications to the System APIs that it enables, this kind of interception would not be possible: an unmodified System API does only what it was written to do.
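To make the mechanism concrete, here is a toy illustration in plain Java, not real hooking code: if the write path is swapped for a wrapper, the app still sees an ordinary stream and its file is written correctly, yet every byte is silently duplicated to a second destination. On a rooted device the equivalent substitution would happen inside the modified system rather than in the app; the class name HookedStream is hypothetical.

```java
import java.io.FilterOutputStream;
import java.io.IOException;
import java.io.OutputStream;

// Toy model of a hijacked write API: behaves like the real stream from the app's
// point of view, but duplicates every byte into a hidden sink.
final class HookedStream extends FilterOutputStream {
    private final OutputStream hiddenCopy;

    HookedStream(OutputStream real, OutputStream hiddenCopy) {
        super(real);
        this.hiddenCopy = hiddenCopy;
    }

    @Override
    public void write(int b) throws IOException {
        out.write(b);         // the write the app asked for
        hiddenCopy.write(b);  // the write the app never sees
    }

    @Override
    public void write(byte[] b, int off, int len) throws IOException {
        out.write(b, off, len);
        hiddenCopy.write(b, off, len);
    }

    @Override
    public void flush() throws IOException {
        out.flush();
        hiddenCopy.flush();
    }
}
```

Nothing in the app’s own code changes, which is why this class of attack cannot be detected simply by checking that the app wrote what it intended to write.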

Why allowing root is risky

So far, we have focused mainly on the risks to the owner of the device. Many of these risks also apply, though, to mobile apps themselves and to the organizations that publish them.

Essentially, as the examples above make clear, the platform’s security guarantees no longer hold once the user roots the device. Nor can the app rely on the platform to restrict what the user, or software running with the user’s root privileges, can do.

For apps running on them, rooted devices increase the risk of:

1. Spoofing, cheating, and abuse of core functionalities

2. User data theft

3. Financial theft

4. IP theft

5. Piracy and DRM violations

Scenarios and examples are endless, but here are a few:

If you are the developer of a mobile game, you might offer users the chance to make in-app purchases to unlock some bonus features. But root may enable them to pick up those same bonuses without paying.

For a financial app, a successful attempt by an attacker to intercept a network call and change the recipient of a payment, for example, could come at a substantial cost to the victim. It would also be bad news for the company behind the app, in reputational terms at the very least.

Any of the risk scenarios above (Malware, Man-in-the-Middle Attacks, System API Hijacking) might also easily lead to user data exfiltration, with attacks specifically targeting users who have rooted their devices.

How app developers can manage the risks of a rooted device

There are broadly only three options:

1. Accept

2. Prevent

3. Mitigate

Which approach you choose as an app developer will depend on a number of factors. But it will always be necessary to consider what the risks are of your app running on a rooted device, and whether those risks are acceptable to you.

At the light end of the risk spectrum, there are content-only applications, such as a museum exhibition app. The user may not need to enter any personal data, and the app may not contain any highly sensitive IP or perform any cryptographic operations. The organization and developers behind such an app may simply choose to accept the low risks.

At the other extreme, healthcare apps managing Personal Health Information (PHI), governed as it is by strict legislation in many countries, may consider the risks too great. In this case, the simplest solution is to implement a root detection mechanism and to prevent the app from running on a rooted device. We will look into the practicalities of this later in the article, too.

Financial app developers may be in a trickier situation. Mobile banking is now a necessity for most customers, and a user-friendly app for managing your finances is a major deciding factor when choosing a bank. Financial institutions therefore face a tough decision: whether to allow their app, with its payment functionality and highly sensitive data, to run on rooted or jailbroken devices, or to risk losing roughly 8% of their target audience. For them, a middle ground might be to mitigate the risks of the app running on rooted devices.

There are a few methods for this, and it is worth combining them all to create the most comprehensive approach.

One is implementing a root detection mechanism and using the results not simply to block the app from running, but to place restrictions on users whose devices are rooted: blocking certain actions, requiring additional authentication, making files read-only, or simply asking the user to explicitly accept the risks (and liability) of running the app on a rooted device.
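A graduated policy of this kind might look like the sketch below. Every name in it (RootPolicy, Action, Decision) is hypothetical, and which action falls into which bucket is an assumption for illustration; each app would define its own mapping. The root-detection result and the user’s explicit acceptance of the risk are inputs, and the decision is made per action rather than for the whole app.

```java
// Hypothetical graduated response to a root-detection result.
final class RootPolicy {
    enum Action { VIEW_BALANCE, EXPORT_STATEMENT, TRANSFER_FUNDS }

    enum Decision { ALLOW, ALLOW_READ_ONLY, REQUIRE_STEP_UP_AUTH, BLOCK }

    static Decision decide(boolean rootDetected, boolean userAcceptedRisk, Action action) {
        if (!rootDetected) {
            return Decision.ALLOW;
        }
        if (!userAcceptedRisk) {
            // Root found and risk not acknowledged: refuse everything.
            return Decision.BLOCK;
        }
        // Root found, risk accepted: restrict per action (illustrative mapping).
        switch (action) {
            case VIEW_BALANCE:     return Decision.ALLOW_READ_ONLY;
            case TRANSFER_FUNDS:   return Decision.REQUIRE_STEP_UP_AUTH;
            case EXPORT_STATEMENT: return Decision.BLOCK;
            default:               return Decision.BLOCK;
        }
    }
}
```

Keeping the decision in one function makes the policy easy to review and to tighten later, although, like any in-app check, it is only as trustworthy as the app binary itself.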

How can this be done? Some root-detection techniques are well known. On Android, the heuristics include checking whether the build tags (Build.TAGS) contain test-keys, searching for Superuser.apk, trying to invoke su, or checking the permissions of the /data folder.
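These heuristics can be sketched in plain Java. This is an illustration under assumptions, not a robust detector: the path list is a commonly cited but incomplete sample, the build tags string would come from android.os.Build.TAGS on a device (it is passed in so the sketch runs on any JVM), and the su check looks for the binary rather than executing it. Every one of these checks can be defeated by root-hiding tools.

```java
import java.io.File;
import java.util.Arrays;
import java.util.List;

// Sketch of common, easily bypassed root-detection heuristics.
// Assumptions: buildTags is android.os.Build.TAGS on a device; SU_PATHS is a small,
// non-exhaustive sample of locations where su or Superuser.apk is often found.
final class RootHeuristics {
    static final List<String> SU_PATHS = Arrays.asList(
            "/system/bin/su", "/system/xbin/su", "/sbin/su", "/su/bin/su",
            "/system/app/Superuser.apk");

    // Official release builds are signed with release-keys; test-keys suggests a custom build.
    static boolean hasTestKeys(String buildTags) {
        return buildTags != null && buildTags.contains("test-keys");
    }

    // True if any of the given files or directories exists.
    static boolean anyExists(List<String> paths) {
        for (String p : paths) {
            if (new File(p).exists()) {
                return true;
            }
        }
        return false;
    }

    // On a stock device an app cannot list /data; if it can, permissions were likely altered.
    static boolean canListDirectory(String path) {
        return new File(path).list() != null;
    }

    static boolean looksRooted(String buildTags) {
        return hasTestKeys(buildTags) || anyExists(SU_PATHS) || canListDirectory("/data");
    }
}
```

Because each signal is weak on its own, apps that use them usually combine several and treat the result as a risk indicator rather than proof.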

But even detecting root, let alone implementing comprehensive anti-root measures, is not as simple as it may seem, especially when you consider the risk of dynamic root access, the changing nature of the operating systems themselves (for example, direct file access has been managed much more strictly since Android 10 introduced scoped storage), and constant updates to root and system customization tools, including innumerable root-hiding mechanisms. Keeping anti-root measures up to date can be almost a full-time development job.

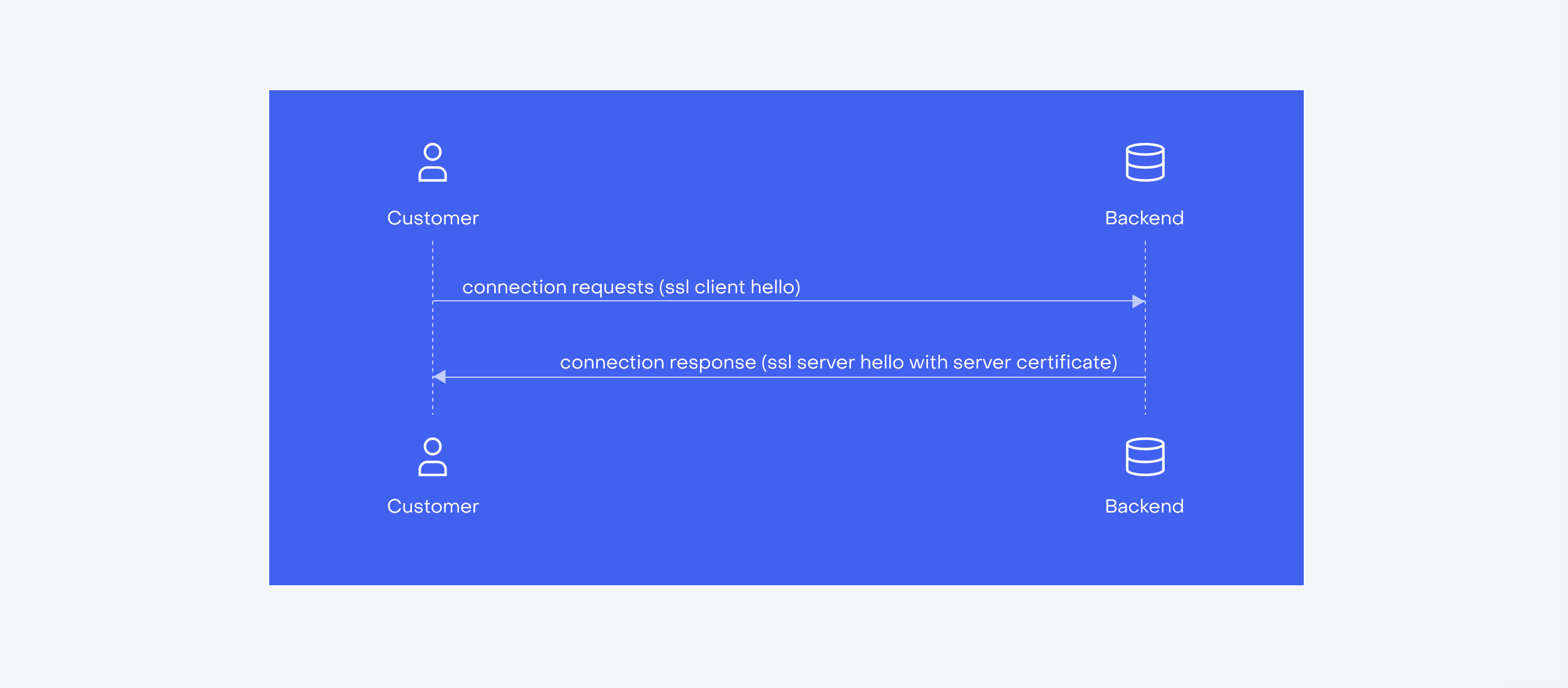

Another method is to directly prevent the specific risks discussed above. For example, to prevent man-in-the-middle attacks, app developers can stop relying solely on the system (which is vulnerable to root-based modifications) to check certificates. Instead, they can pre-specify the legitimate certificates, or their public keys, within the app itself and implement checks that block any connection to their domains when the server does not present the ‘pinned’ certificate. This is SSL Pinning.

A simple diagram may be useful here:

When the device first attempts to establish a secure connection, the backend replies with its certificate to prove that it is the expected, legitimate server. The mobile app then checks that certificate cryptographically against the one embedded in the app itself, typically by comparing a hash of its public key. This means the app no longer depends solely on the system’s checks, since the system itself may be compromised.
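The comparison step can be sketched with standard Java security APIs. The sketch assumes the common practice of pinning a SHA-256 hash of the certificate’s public key (its SubjectPublicKeyInfo) rather than the whole certificate, which lets the server renew its certificate without an app update as long as the key stays the same; the sha256/<base64> pin format mirrors the one used by OkHttp’s CertificatePinner. On Android you would more likely declare pins in a Network Security Config pin-set or use OkHttp’s CertificatePinner than hand-roll this; the class name is hypothetical.

```java
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.security.PublicKey;
import java.security.cert.X509Certificate;
import java.util.Base64;
import java.util.Set;

// Sketch of public-key pinning: accept the connection only if some certificate in the
// server's chain has a public key whose SHA-256 hash is pinned inside the app.
final class PinCheck {
    // Computes "sha256/<base64>" over DER-encoded SubjectPublicKeyInfo bytes.
    static String spkiPin(byte[] spkiDer) {
        try {
            byte[] digest = MessageDigest.getInstance("SHA-256").digest(spkiDer);
            return "sha256/" + Base64.getEncoder().encodeToString(digest);
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 must be supported by every JVM", e);
        }
    }

    // For standard key types, getEncoded() returns the X.509 SubjectPublicKeyInfo encoding.
    static String spkiPin(PublicKey key) {
        return spkiPin(key.getEncoded());
    }

    static boolean chainMatchesPins(X509Certificate[] chain, Set<String> pinnedHashes) {
        for (X509Certificate cert : chain) {
            if (pinnedHashes.contains(spkiPin(cert.getPublicKey()))) {
                return true;
            }
        }
        return false;
    }
}
```

In a custom X509TrustManager, a check like chainMatchesPins would typically run after the platform’s default validation has succeeded, so that pinning adds to the system check rather than replacing it.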

The same principle, of not allowing the app to fully trust an execution environment that may be insecure, should be applied to blocking other attack vectors that may exploit root privileges.

But what about trusting the app itself?

We have talked about not trusting the operating system, that is, the app’s execution environment. But what about trusting the app itself?

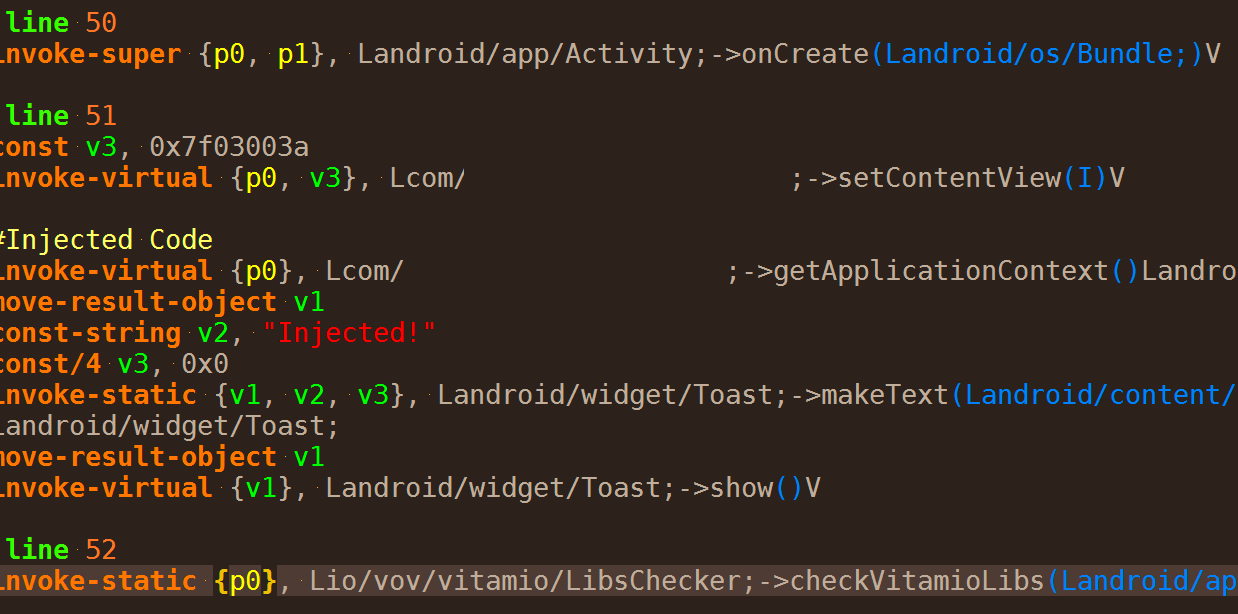

Root detection, enhanced authentication procedures, restrictive security policies, SSL Pinning: all of these defense mechanisms are based on functionality within the app. But an app can be tampered with and modified just as the system can; what then becomes of its defense mechanisms?

The tampering process can be relatively simple. The attacker downloads a target APK and unzips it (yes, an Android app is just a signed zip archive), disassembles the DEX files into smali, a human-readable representation of Dalvik bytecode that is relatively easy to read and modify, and then, using much the same tools, reassembles the app and signs it with their own certificate.
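Because an APK is an ordinary ZIP archive, the standard java.util.zip classes are enough to look inside one, which shows how low the barrier to entry is. The sketch only lists entries; the disassembly and rebuilding steps are usually done with dedicated tools such as apktool, which are not shown here. The class name is hypothetical.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;
import java.util.zip.ZipEntry;
import java.util.zip.ZipInputStream;

// Lists the entries of an APK (or any ZIP archive) in archive order: typically
// AndroidManifest.xml, classes*.dex, resources, and, for APKs signed with the
// v1 (JAR) scheme, signature files under META-INF/.
final class ApkPeek {
    static List<String> entryNames(InputStream apk) {
        List<String> names = new ArrayList<>();
        try (ZipInputStream zip = new ZipInputStream(apk)) {
            ZipEntry entry;
            while ((entry = zip.getNextEntry()) != null) {
                names.add(entry.getName());
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return names;
    }
}
```

Calling it on a local copy of a release APK would list the classes.dex files that an attacker would go on to disassemble.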

This is why it is so important to guarantee that two fundamental principles of application security are upheld:

Application Hardening

Mobile app hardening means not just obfuscating, but also encrypting, virtualizing, and isolating the code and resources within your app. This makes them much more difficult to reverse-engineer and exploit.

Application Integrity

The integrity of your app needs to be checked and guaranteed during runtime. Without integrity, other security measures can be removed or overridden, and the application’s core functionalities can be tampered with. When we refer to mobile app integrity, we mean that users are able to trust that the application is the one it purports to be. So, integrity needs to be verified from your app being uploaded to an app store through to it being downloaded to the user’s device, right up to launch and during runtime.

That means integrated checksum and hash verifications based on all of the contents of the app (to ensure that the bits on an end user’s device are identical to those that the developer published), combined with checks on signing certificates, and guarantees that the app was installed from a trusted platform. If the app doesn’t comply with any of these requirements, it shouldn’t be able to run.
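Two of these checks can be sketched as follows, with hypothetical names and placeholder inputs: compare a SHA-256 digest of the app’s signing certificate with the value the developer expects, and confirm that the installing package is on an allow-list. On Android the certificate bytes would come from PackageManager (for example through PackageInfo.signingInfo on API 28 and later) and the installer name from PackageManager.getInstallSourceInfo (API 30 and later); those calls are not shown, so the inputs are passed in directly. Checks like these live inside the app and can themselves be patched out, which is why they need to be combined with the hardening described above.

```java
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Set;

// Sketch of two runtime integrity checks. Assumptions: signingCertDer is the DER-encoded
// signing certificate obtained from PackageManager; expectedCertSha256 is a hex digest
// embedded at build time; installer is the installing package name (may be null).
final class IntegrityCheck {
    static String sha256Hex(byte[] data) {
        try {
            byte[] d = MessageDigest.getInstance("SHA-256").digest(data);
            StringBuilder sb = new StringBuilder(d.length * 2);
            for (byte b : d) {
                sb.append(String.format("%02x", b & 0xff));
            }
            return sb.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException(e);
        }
    }

    // A re-signed (tampered) APK carries the attacker's certificate, so the digest differs.
    static boolean signerMatches(byte[] signingCertDer, String expectedCertSha256) {
        return sha256Hex(signingCertDer).equalsIgnoreCase(expectedCertSha256);
    }

    // Sideloaded installs typically report no installer or an unexpected one.
    static boolean installedFromTrustedSource(String installer, Set<String> trustedInstallers) {
        return installer != null && trustedInstallers.contains(installer);
    }

    // The app should refuse to run (or enter a restricted mode) if either check fails.
    static boolean mayRun(byte[] signingCertDer, String expectedCertSha256,
                          String installer, Set<String> trustedInstallers) {
        return signerMatches(signingCertDer, expectedCertSha256)
                && installedFromTrustedSource(installer, trustedInstallers);
    }
}
```

For example, an app distributed only through Google Play might allow-list com.android.vending as its installer; the same sha256Hex helper could also be applied to individual files in the app’s contents.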

Conclusion

So, to return finally to the merits of accepting, preventing, and mitigating the risks of allowing an app to run on a rooted device, which is the best approach for you?

Moral, legal, and technical considerations will all have to play a role in your decision.

Firstly, it is reasonable to think that the user should be free to do whatever they like with their own piece of hardware. In some jurisdictions, though, it may be a legal requirement not to allow the app to run on rooted devices. In others, it may be the developer's responsibility to make clear the risks and potential consequences of particular actions.

Other cases are not so clear cut; who is responsible if the personal health information from a rooted device ends up in the hands of bad actors? What happens if the user loses money due to a virus operating on a rooted device?

These questions do not have easy answers, and every case is different.

Nevertheless, if security is a top priority for your app (and your users), there is every reason to prevent it from running on a rooted device.

If you would prefer to mitigate the risks, we have seen that a combination of root detection, enhanced authentication procedures, restrictive security policies, and SSL Pinning can be highly effective.

Whichever path you choose, though, you will need to take a holistic approach to security, making sure that your app itself is trustworthy enough to run in an untrusted environment and to exist in a zero-trust world.